“The sign of intelligence is that you are constantly wondering. Idiots are always dead sure about every damn thing they are doing in life.” ― Jaggi Vasudev

Good advice, indisputable truths, definitive definitions…

I have had a problem with all of this lately. I have found that the more we generally agree that something is 100% true and final, the more I want to dispute it.

This was sort of my private hobby, which irritated my friends and family (when they were for welfare, I was for freedom; when they were for development, I was for taxes). As it turns out, I am not the only one – people like this have been pigeonholed and labeled “contrarians”.

In short, a contrarian is a person who is against some generally accepted view. My case is a bit different, because instead of opposing general views, I increasingly oppose my own views.

In other words, I have doubts.

I’ve noticed this pattern while reading books. The amazing thing is that you only need to write something on a piece of paper and it immediately becomes a truth that every reader can either wholeheartedly accept or completely reject – as if only two states existed: truth and falsehood.

However, the older (the more experienced?) I get, the more clearly I can see that the spectrum between one and the other is becoming wider. This makes me less radical.

I used to think that something could be 100% good or bad, with no middle ground or gray areas, but the longer I live, the more options and possibilities I see. What does this mean? It means that I am more willing to give up the need to state facts (“you should do A”) and instead describe my personal experience (“for me A worked really well”).

As humans, we like strong opinions – they give us confidence, stability, and a sense of being right. Strong opinions are also easy to communicate and quickly find a sympathetic ear. Finding simple solutions to complicated problems is also sexy. This is why productivity gurus, populist politicians, and startup mentors are so popular.

The problem is that most things don’t have simple solutions.

First, in our complicated world, hardly anything has a solution at all. Yes, I’m stepping onto philosophical turf, but bear with me and think about it: can we possibly solve any problem to a conclusive end? One can never find true solutions for really fascinating problems (I recently heard this from Merlin Mann on the Reconcilable Differences podcast).

Secondly, it is very unlikely that our solution is ever 100% good…

What if it is 90% good? Or 70% good, 20% neutral, and 10% bad? Isaac Asimov described this situation beautifully in his 1989 essay “The Relativity of Wrong”, where he shows how small the difference is between thinking that the earth is flat, that it is spherical, or that it is an evenly flattened ellipsoid (all of which are wrong assumptions, by the way!). It all depends on the accuracy of our measurements.

Third: What do we get from the conviction that we chose a good solution? Lately, I’ve been fascinated with Tom Gilb’s ideas and work methods (here is his website straight from 1998).

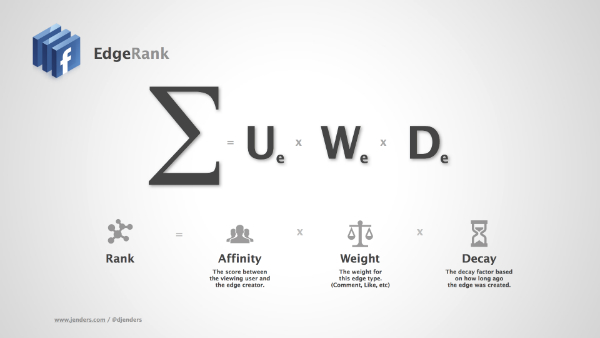

Gilb promotes a tool for strategic thinking about product development called the Impact Estimation Table, ITE (link to PDF article). The table has a row for each of your goals (e.g. getting more purchases in our online store) and a column for each possible method of achieving these goals (such as “let’s make a mobile website”).

Next, we evaluate the impact of each method on each goal (let’s assume that some method has a 60% positive impact on a scale from -100% to +100%). One of the unique elements of ITE is that you must also evaluate your Confidence: you have to determine how strong your convictions are. For example, the table may say that our mobile website will have a +60% impact on getting more purchases in our store; we then have to assess how strong our conviction is that this will actually happen.

ITE uses a scale from 0 – total uncertainty, where no one has ever executed the task before (e.g. a manned flight beyond the solar system) – to 1, where humans have vast experience from repetitive activity, such as making our 1000th hand-made Ikea chair.

Between these two extremes there are a number of intermediate levels. For example, a confidence of 0.5 can mean that someone else has performed the activity and you can make enquiries about their experience; 0.7 can mean that you have some personal experience, but under a different set of conditions.
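
To make this more tangible, here is a minimal Python sketch of such a table. Treat it as my own illustration, not as Gilb's official procedure: the names `Estimate` and `rank_methods`, the “faster checkout” method, and all the numbers are made up, and multiplying impact by confidence to get a single score is a simplifying assumption on my part.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """One cell of the table: a method's estimated effect on a goal."""
    impact: float      # from -1.0 (-100%) to +1.0 (+100%)
    confidence: float  # from 0.0 (nobody has done it) to 1.0 (routine work)

    def __post_init__(self) -> None:
        if not -1.0 <= self.impact <= 1.0:
            raise ValueError("impact must be between -1.0 and 1.0")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0.0 and 1.0")

    def adjusted(self) -> float:
        # Assumption: discount the impact by how sure we are about it.
        return self.impact * self.confidence


def rank_methods(table: dict[str, dict[str, Estimate]]) -> list[tuple[str, float]]:
    """Sum the confidence-adjusted impact of each method over all goals
    and return (method, total) pairs, best first."""
    totals: dict[str, float] = {}
    for methods in table.values():
        for method, estimate in methods.items():
            totals[method] = totals.get(method, 0.0) + estimate.adjusted()
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Rows are goals, columns are methods (hypothetical numbers).
table = {
    "more purchases in our online store": {
        "mobile website": Estimate(impact=0.60, confidence=0.3),
        "faster checkout": Estimate(impact=0.40, confidence=0.7),
    },
}

ranking = rank_methods(table)
for method, score in ranking:
    print(f"{method}: {score:.2f}")
```

With these made-up numbers, the mobile website promises the bigger raw impact, but once my low confidence is taken into account, the more modest and better-understood method comes out on top. For me, that is the whole point of writing the confidence down.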

All of this makes my once-categorical opinions increasingly changeable. It does not mean that I feel reluctant to clearly voice my opinions. I just simply have less and less confidence in them. Even on topics in which I’m supposed to be an expert (hey guys, I wrote a book!).

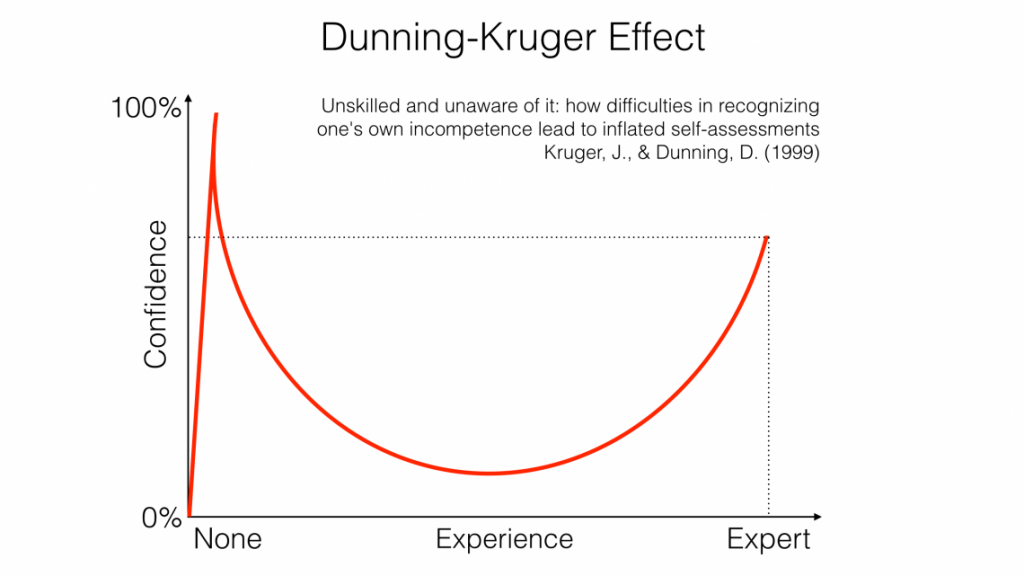

I also have a feeling that most of my categorical opinions were expressed when I had no substantive basis for them. This phenomenon is called the “Dunning-Kruger effect”.

I would like to believe that my current experiences are just sliding down the slope of certainty after climbing the summit of ignorance.

“Mr Pipo face or vase” by Nevit Dilmen, derivative work of Silhouette_Mr_Pipo.svg by Nevit Dilmen. Licensed under CC BY-SA 3.0 via Wikimedia Commons.