As generative AI tools like Undress continue to encroach on internet users’ right to privacy, regulations are struggling to keep up with innovation.

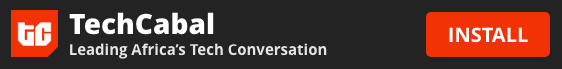

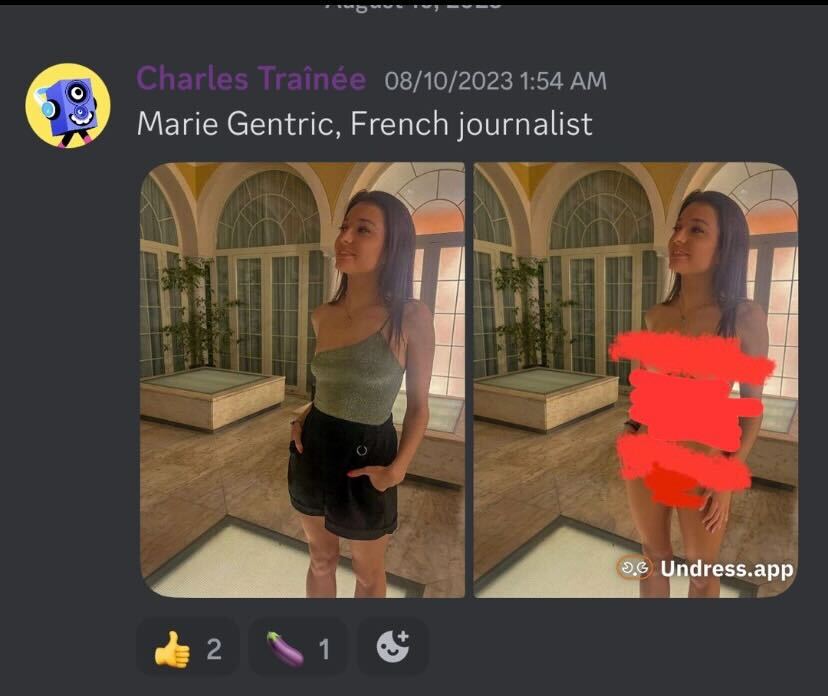

In one month, the Undress app recorded over 7.6 million visits, with users spending an average of 21 minutes per session. By comparison, users of TikTok, one of the world’s most engaging social media platforms, spend an average of just over 10 minutes per session on that app. Undress is a generative AI tool that lets users upload a picture of anyone and receive, in return, an image of that person with their clothes removed. The site also lets users specify preferences such as height, skin tone, and body type when generating a deep nude of the person in the uploaded picture.

Over the last three months, Undress’s global traffic ranking has climbed from 22,464th to 5,469th. On search engines, the keywords “undress app” and “undress ai” have a combined search volume of over 200,000 searches per month, an indication of the demand for the tool.

On its website, whose tagline is “Undress any girl for free,” the app’s creators state in a disclaimer that they “do not take any responsibility for any of the images created using the website.” This leaves victims whose pictures have been undressed without their consent with no clear recourse: they have no avenue to contact the site with complaints or requests for removal.

Some reports also state that fraudulent loan apps gain access to users’ photo galleries, use tools like Undress to morph their pictures into nude images, and then use those images to extort money from them.

According to the Economic Times, Undress is but one generative AI application in a cesspool of similar tools. Google Trends has classified searches for such sites as ‘breakout’ searches, meaning they have seen a ‘tremendous increase,’ probably because these queries are new and had few searches before the boom of generative AI tools.

For victims, who already have no avenue to prevent the nonconsensual use of their images, the bad news is that these tools will only keep getting better. According to one expert, the technology will eventually reach a point where the resulting photos are so convincing that there is no way to distinguish them from real ones.

“Children between the ages of 11 and 16 are particularly vulnerable. Advanced tools can easily morph or create deepfakes with these images, leading to unintended and often harmful consequences. Once these manipulated images find their way to various sites, removing them can be an arduous and sometimes impossible [task],” said public policy expert Kanishk Gaur.

According to Jaspreet Bindra, founder of Tech Whisperer, a technology advisory firm, the response should start with ‘classifier’ technology that can distinguish genuine images from fake ones.

“The solution has to be two-pronged—technology and regulation,” he said. “We need to have classifiers to identify what is real and what is not. Similarly, the government must mandate that something AI-generated should be clearly labeled as such.”

With debates over what a regulatory framework for AI should look like still raging, generative AI tools like Undress show the need to expedite this process. Deepfakes have already demonstrated the harm they can do by spreading misinformation and fake news in the political sphere, and tools like Undress show that this threat is now extending beyond politicians, celebrities, and influencers to everyday people, especially women who innocently upload their images to their social media profiles.