By Jessica Aletor

The race toward Artificial General Intelligence, often referred to as AGI, is accelerating. From Silicon Valley boardrooms to startup hubs across Lagos and Nairobi, the spotlight remains firmly on model capability: larger architectures, more parameters, and increasingly human-like outputs.

But beneath the hype lies a fragile reality. The world’s most advanced AI systems are only as reliable as the data infrastructures that sustain them. Without that foundation, AGI isn’t progress. It is performance.

I argue that the biggest risk in the AGI race isn’t model capability. It is the fragility of the data systems beneath it. We are building Ferraris but fuelling them with low-grade kerosene.

As the industry pushes toward AGI, we are confronting a hard truth. Even the most advanced models become operational illusions when the data underpinning them lacks integrity. The real battle for the future of AI is no longer just in the code. It is in the production-grade data operations that determine whether these systems are safe, reliable, and scalable.

Beyond “Cleaning”: The Rise of the Data Architect

For years, data preparation was treated as a secondary task: the unglamorous work of cleaning spreadsheets and formatting datasets. In the era of generative AI, that mindset is obsolete. Today, we do not just clean data. We architect it.

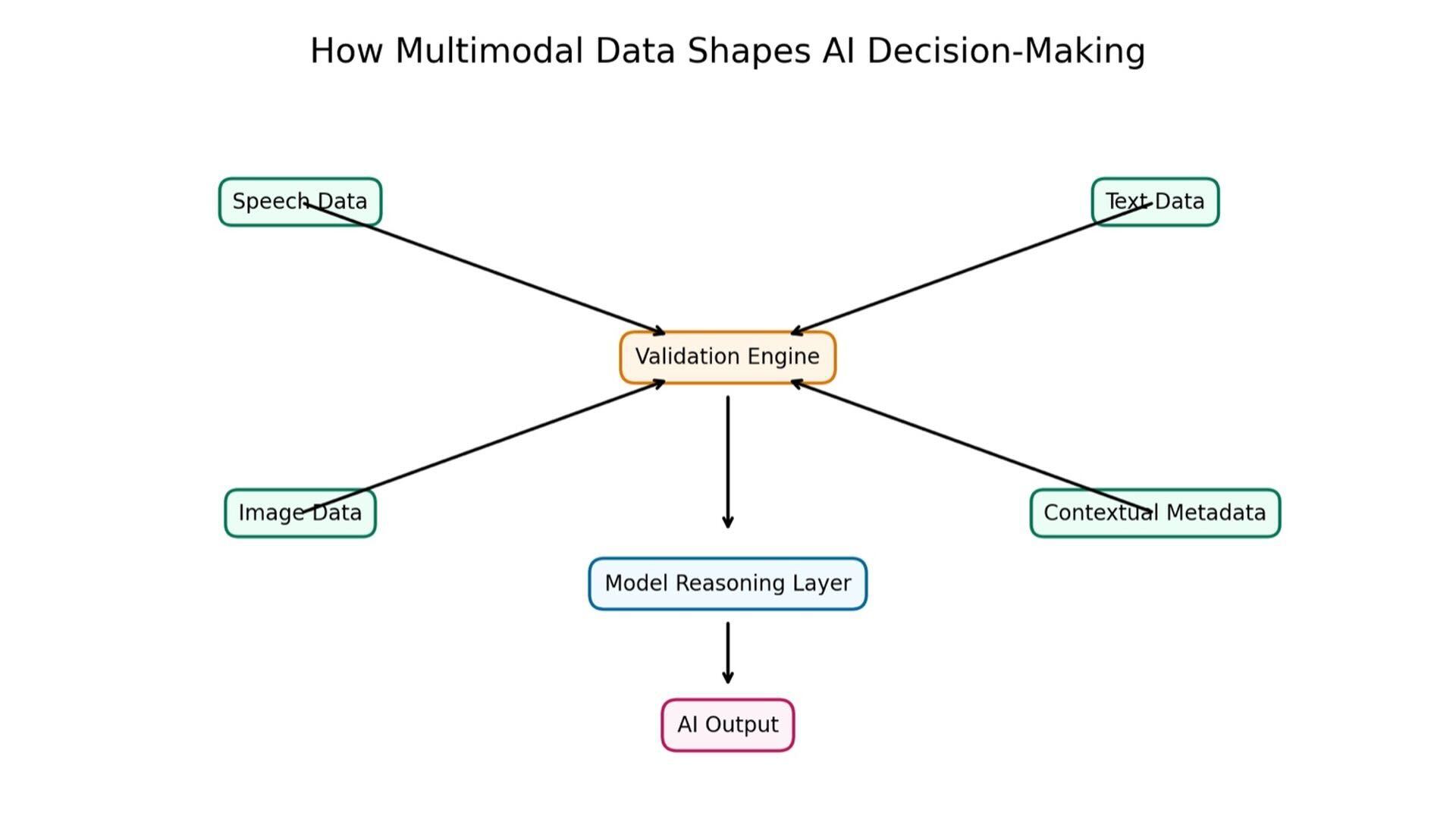

Working across large-scale AI production environments, I have seen firsthand that the transition from research prototype to production-ready system demands a level of data rigour most organisations underestimate. When multimodal datasets involving text, image, and speech intersect, each modality brings its own failure modes, and the complexity compounds quickly.

The question is no longer how much data you have, but how robust your validation frameworks are. If training data is time-dependent, culturally narrow, or contextually flawed, the resulting AI does not just fail. It misleads.

This is where the role of data architects becomes foundational, not as a support function but as a core driver of reliability, safety, and performance.

The Economic Cost of “Dirty” Data

This challenge is not theoretical. It carries significant commercial and institutional consequences.

In high-stakes financial environments, I have seen how data-led prioritisation strategies contributed to the recovery of over £400 million in distressed portfolios, simply by improving data reliability and decision-making frameworks. Conversely, weak data governance can destabilise entire programmes, threaten funding pipelines, and erode organisational trust.

Across African markets, the stakes are even higher. As AI becomes embedded in fintech, agritech, and public sector infrastructure, the principle of garbage in, garbage out becomes a matter of economic resilience.

Where local language data, informal economies, and fragmented digital records shape decision-making, weak data governance does not just create technical failure. It risks institutional mistrust. The future of AI on the continent will depend less on model size and more on whether we invest in resilient, locally grounded data infrastructure.

The Sentinel Approach: Governance as a Production Discipline

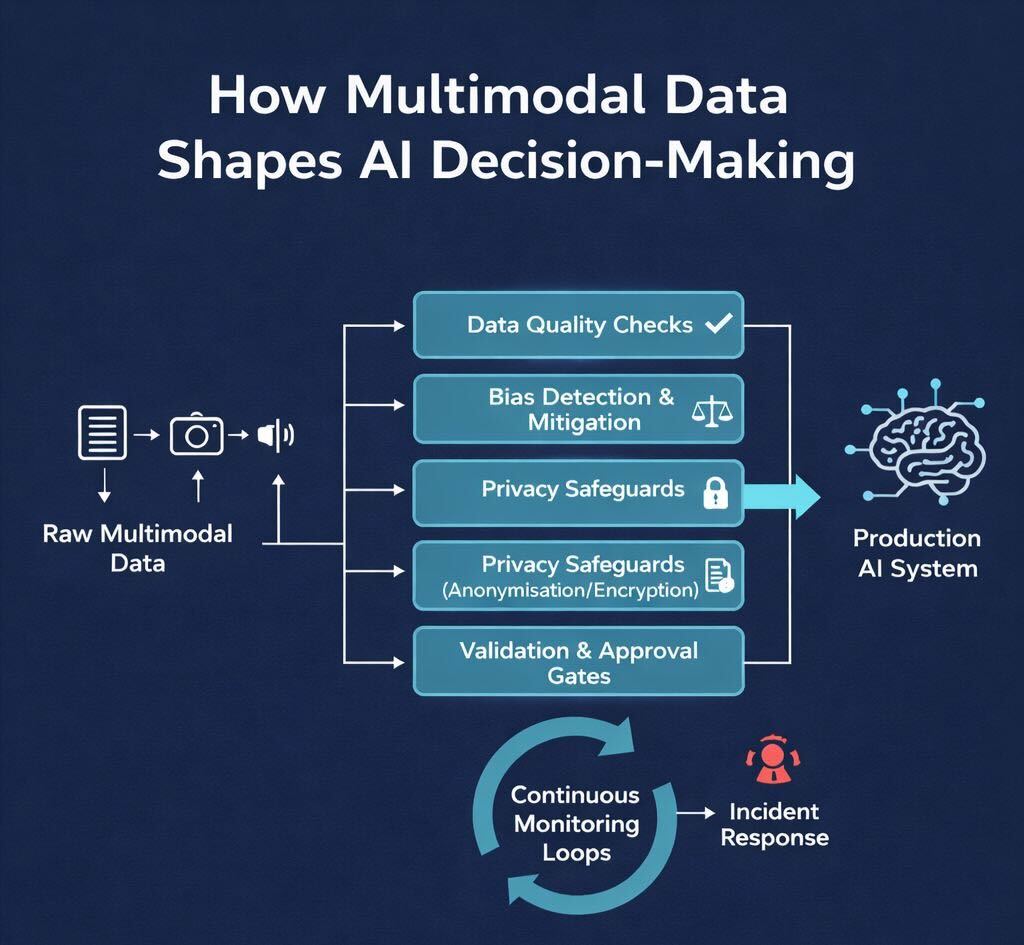

To move beyond the AGI illusion, the tech ecosystem must shift toward what I describe as the Sentinel Approach: a production-first philosophy where governance, validation, and accountability are embedded into data architecture before models ever reach deployment.

This means:

Automation readiness. Moving away from reactive manual interventions and toward real-time monitoring pipelines that detect anomalies before they scale.

Multimodal rigour. Designing validation systems that account for linguistic nuance, regional context, and cultural diversity, whether an AI is interpreting a Nigerian accent or analysing regulatory text.

Governance as a feature, not a constraint. Privacy, compliance, and accountability frameworks must be engineered into the data pipeline from the outset, not layered on after deployment.
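To make these three principles concrete, here is a minimal sketch in Python of a validation gate that a data pipeline might run on each incoming batch before it reaches training. It is an illustration only, not a description of any specific production system. The `Record` fields, the `validate_batch` function, and every threshold are hypothetical names and values chosen for the example.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Record:
    """One training example with the metadata the gate needs (hypothetical schema)."""
    text: str
    language: str          # e.g. "en", "yo", "ha"
    collected_at: datetime
    has_consent: bool      # stand-in for a real privacy/compliance check


def validate_batch(records, now, max_age_days=365, min_languages=2,
                   max_empty_rate=0.05):
    """Return a list of issues found in the batch; an empty list means it passes.

    All thresholds are illustrative assumptions, not recommended values.
    """
    if not records:
        return ["batch is empty"]

    issues = []
    total = len(records)

    # Automation readiness: flag an anomalous share of empty records early.
    empty = sum(1 for r in records if not r.text.strip())
    if empty / total > max_empty_rate:
        issues.append(f"empty-text rate {empty / total:.0%} exceeds {max_empty_rate:.0%}")

    # Time dependence: flag records older than the allowed window.
    cutoff = now - timedelta(days=max_age_days)
    stale = sum(1 for r in records if r.collected_at < cutoff)
    if stale:
        issues.append(f"{stale} record(s) older than {max_age_days} days")

    # Multimodal rigour (simplified to language coverage): flag narrow batches.
    languages = Counter(r.language for r in records)
    if len(languages) < min_languages:
        issues.append(f"only {len(languages)} language(s) present, need {min_languages}")

    # Governance as a feature: block records that lack a consent flag.
    missing_consent = sum(1 for r in records if not r.has_consent)
    if missing_consent:
        issues.append(f"{missing_consent} record(s) missing consent")

    return issues


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    batch = [
        Record("How do I open an account?", "en", now, True),
        Record("Bawo ni mo se le si account?", "yo", now, True),
    ]
    problems = validate_batch(batch, now)
    print("PASS" if not problems else problems)
```

The design choice worth noting is that the gate returns every issue it finds rather than stopping at the first one, so a rejected batch arrives with a full explanation that can be logged and audited.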

The organisations that adopt this mindset will not just build smarter AI. They will build trustworthy AI.

The Future Is Foundation First

The next decade of innovation will not be defined by who has the flashiest demo or the most impressive model benchmarks. It will be defined by who has the most resilient data infrastructure.

We must stop viewing data specialists as the support crew behind AI and start recognising them as the architects of intelligence itself.

The future of AI will not be built by models alone.

It will be built by the people who design, govern, and protect the data that makes intelligence possible.

About the author

Jessica Aletor is an AI data specialist working at the intersection of multimodal model development, data governance, and production AI systems. Her background spans government, financial data strategy, and large-scale AI operations, where she has focused on designing validation frameworks and resilient data infrastructures that enable intelligent technologies to move from experimentation into safe, real-world deployment across global and emerging markets.

Her work explores how data architecture shapes the reliability, safety, and long-term impact of intelligent systems.