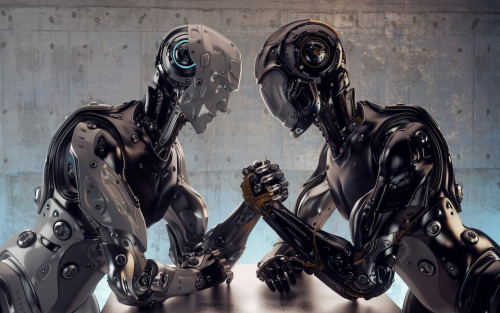

We must consider the key moral and policy questions around artificial intelligence and cyborg technologies to ensure our innovations don’t destroy us.

How much do we really know about the impact of scientific breakthroughs — on technology or on society? Not enough, says Marcelo Gleiser, the Appleton Professor of Natural Philosophy and a professor of physics and astronomy at Dartmouth College.

As someone who explores the intersection between science and philosophy, Gleiser argues that morality needs to play a stronger role in innovations such as artificial intelligence and cyborg technologies due to the risk they could pose to humanity. He has described an artificial intelligence more creative and powerful than humans as the greatest threat to our species.

While noting that scientific breakthroughs have the potential to bring great harm or great good, Gleiser calls himself an optimist. But he says in this interview that "the creation of a transhuman being is clearly ripe for a careful moral analysis."

When it comes to understanding how to enhance humans through artificial intelligence or embedded technologies, what do you view as the greatest unknowns we have yet to consider?

At the most basic level, if we do indeed enhance our abilities through a combination of artificial intelligence and embedded technologies, we must consider how these changes to the very way we function will affect our psychology. Will a super-strong, super-smart post-human creature have the same morals that we do? Will an enhancement of intelligence change our value system?

At a social level, we must ask who will have access to these technologies. Most probably, they will initially be costly and accessible only to a minority (not to mention military forces). The greatest unknown is how this newly divided society will function. Will the different humans cooperate or battle for dominance?

As a philosopher, physicist and astronomer, do you believe morality should play a greater role in scientific discovery?

Yes, especially in topics where the results of research can affect us as individuals and society. The creation of a transhuman being is clearly ripe for a careful moral analysis. Who should be in charge of such research? What moral principles should guide it? Are there changes in our essential humanity that violate universal moral values?

For example, should parents be able to select specific genetic traits for their children? If a chip could be implanted in someone’s brain to enhance its creative output, who should be the recipient? Should such developments be part of military research (which seems unavoidable at present)?

You've cited warnings by Stephen Hawking and Elon Musk in suggesting that we need to find ways to ensure that AI doesn't end up destroying us. What would you see as a good starting point?

The greatest fear behind AI is loss of control: the machine that we want as an ally becomes a competitor. Given its presumably superior intellectual powers, if such a battle were to ensue, we would lose.

We must make sure this situation never occurs. There are technological safeguards that could be implemented to avoid this sort of escalation. An AI is still computer code that humans have written, so in principle it is possible to input certain moral values that would ensure that an AI would not rebel against its creator.

Could the AI supersede the code? Possibly, which is why some people are very worried. There could be a shutdown device unknown to the AI that could be activated in case of an emergency. This would need to be outside the networking reach of the AI.

Are there policies that can be put in place that could better ensure that scientific breakthroughs are used appropriately?

From a policy perspective, granting agencies should monitor the goals of the funding to make sure the intentions are constructive and that safeguards are implemented from the start. Governments should work in partnership with scientists and philosophers to maintain transparency and to implement humane operating procedures.

Controls on new technologies should be developed not just at the national level but at the international level. Global treaties should ensure that cutting-edge research involving AI and transhuman technologies is carried out according to basic moral rules that protect the social order.

There is urgency in developing these rules of conduct. Let us not repeat the errors from climate change regulation — we must act before it’s too late.

Are you generally an optimist or pessimist about how humanity uses science?

I’m optimistic. The human drive to kill existed before science was here. It’s not a scientific problem — it’s a moral problem embedded in a species that evolved within a hostile environment and that is still unable to look beyond its origins. Humans will continue to use science to kill and to heal.

But I detect the emergence of a new mindset, one that seeks a higher moral ground. This mindset is a byproduct of a new global consciousness, a consequence of our increasing knowledge of our cosmic position as self-aware molecular machines living on a rare planet. Science creates not only new machines and technologies but also new worldviews. It's time we moved on from our ancient tribal divides.

(Top image: Courtesy of Thinkstock)

Marcelo Gleiser is the Appleton Professor of Natural Philosophy and a professor of physics and astronomy at Dartmouth College. His latest book is “The Island of Knowledge.”

This post originally appeared on GE Reports.