In the age of generative AI and digital humans, what happens when the mirror doesn’t reflect you? When hair textures are straightened, skin tones lightened, locs or braids erased, and the “ideal” on screen looks nothing like you? For many African creators, platforms, and audiences, these aren’t aesthetic quirks: they are questions of identity, dignity, and rights.

The visual-rights gap in the AI era:

Generative AI models are now capable of producing hyper-realistic or stylised human likenesses, from avatars to virtual influencers to synthetic spokespeople. Yet, many of these systems have been trained on datasets skewed toward Western phenotypes, straight hair textures, lighter skin, and standardised body types. The result: misrepresentations, erasures, and stereotyped visuals of people of African descent.

Such distortions don’t just look wrong; they erode trust, diminish representation, and risk violating fundamental rights to equality and human dignity. Regulators are paying attention. Under the EU AI Act, AI systems classified as “high-risk”, a category that includes uses with a significant impact on fundamental rights, must meet strict obligations. Although the Act is European, it can apply to providers outside the EU whose systems or outputs are used there, so its reach matters for companies that build for or serve African users.

When representation becomes a risk:

Consider a creative agency in Lagos that uses a generative-image model to produce marketing visuals. If the model inadvertently lightens skin tones, straightens textured Afro hair, erases baldness, or adds facial hair where none exists, the impact may appear subtle. But for the audience, the message is clear: a certain look is normal, others invisible or distorted.

Africa is rich in hair textures, skin tones, and cultural practices such as locs, braids, and headscarves, so the risk of misrepresentation is higher when models are not tailored to that diversity. Misrepresentation becomes structural. Organisations that ignore this may face reputational damage, user distrust, or regulatory scrutiny (especially as policymakers explore AI fairness and representation frameworks).

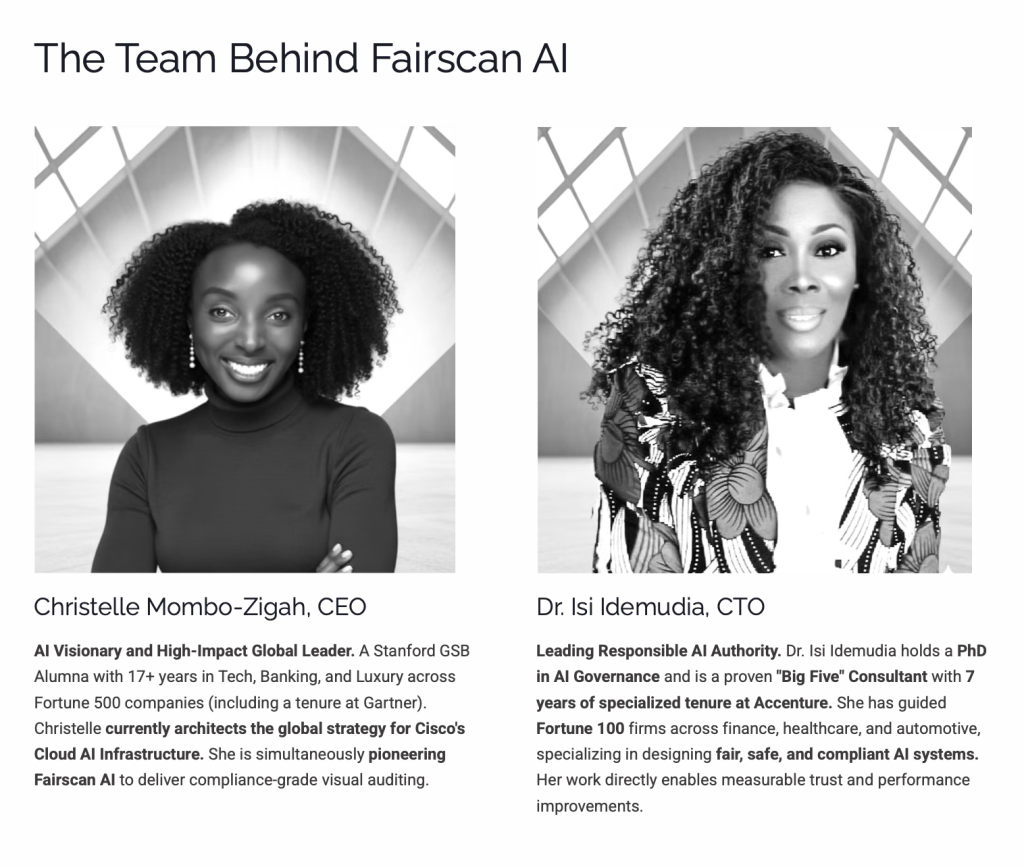

Meet Fairscan AI, the human-rights compliance partner:

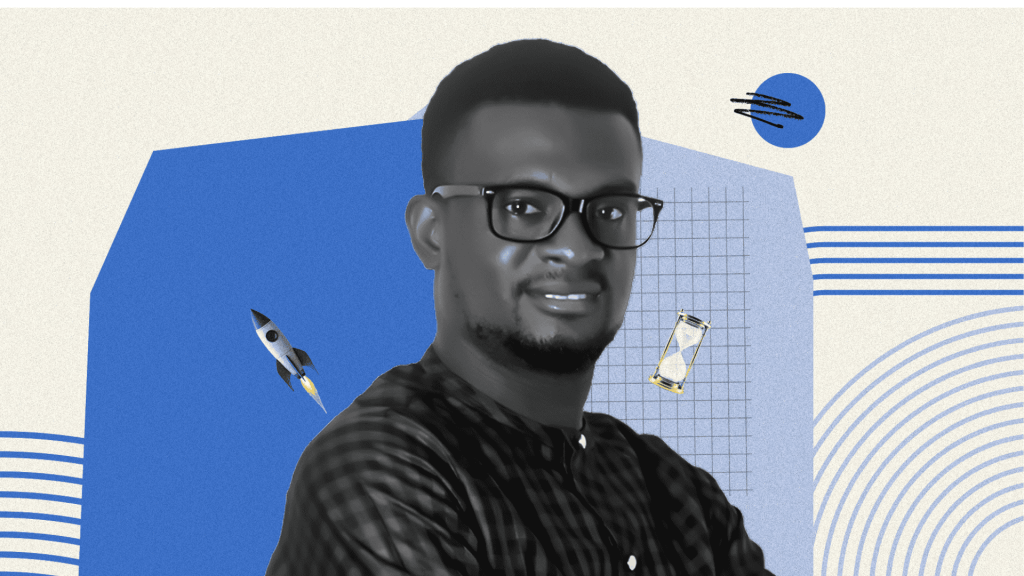

Enter Fairscan AI: a solution born from the intersection of representation bias detection and human-rights alignment. Previously focused on detecting bias in visuals (skin tone, facial features, and hair texture), Fairscan AI is evolving. The new direction is to map bias metrics directly to human rights obligations (non-discrimination, dignity, equality) and to build a Human Rights Impact Assessment module for AI-generated visuals.
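To make the idea of mapping bias metrics to rights obligations concrete, here is a minimal sketch in Python. It is not Fairscan AI’s implementation or API: the metric names, the 0-to-1 scale, the mapping table, and the 0.3 threshold are all hypothetical, chosen only to illustrate how a measured visual bias could be traced to the rights it bears on.

```python
from dataclasses import dataclass

# Hypothetical mapping from representation-bias metrics to the rights they
# bear on. Metric names and groupings are illustrative assumptions only.
METRIC_TO_RIGHTS = {
    "skin_tone_shift": ["non-discrimination", "dignity"],
    "hair_texture_erasure": ["dignity", "equality"],
    "headwear_removal": ["dignity"],
}


@dataclass
class MetricResult:
    name: str
    value: float  # assumed scale: 0.0 = no measured bias, 1.0 = worst case


def rights_risk(results):
    """Return, for each right, the worst metric value that maps to it."""
    risk = {}
    for result in results:
        if not 0.0 <= result.value <= 1.0:
            raise ValueError(f"{result.name} is outside the 0-1 scale")
        # An unknown metric raises KeyError rather than being silently skipped.
        for right in METRIC_TO_RIGHTS[result.name]:
            risk[right] = max(risk.get(right, 0.0), result.value)
    return risk


def flagged_rights(risk, threshold=0.3):
    """List the rights whose risk meets or exceeds the (assumed) threshold."""
    return sorted(right for right, value in risk.items() if value >= threshold)


audit = [
    MetricResult("skin_tone_shift", 0.4),
    MetricResult("hair_texture_erasure", 0.1),
    MetricResult("headwear_removal", 0.2),
]
risk = rights_risk(audit)
print(flagged_rights(risk))
```

Taking the worst value per right, rather than an average, is a deliberate choice in this sketch: a severe distortion on one dimension should not be diluted by good results on the others.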

What does this mean in practice?

- A Nigerian media platform can run its generative-visual pipeline through Fairscan AI’s audit: are Afro-hair textures, skin tones, baldness, grey hair, facial hair, headscarves, and locs properly represented?
- An African ad agency can receive a Human Rights Impact Score aligned with rights frameworks, showing where visuals may pose a risk of misrepresentation or erasure.
- Tech platforms seeking to serve African audiences can build a compliance trail: “We used Fairscan AI to audit our synthetic-human model for African phenotypes before release.”

By treating visual representation as a human-rights challenge, not just a design or bias checkbox, Fairscan AI helps organisations move from nice-to-have inclusion to rights-aligned infrastructure.