The BackEnd explores the product development process in African tech. We take you into the minds of those who conceived, designed and built the product; highlighting product uniqueness, user behaviour assumptions and challenges during the product cycle.

—

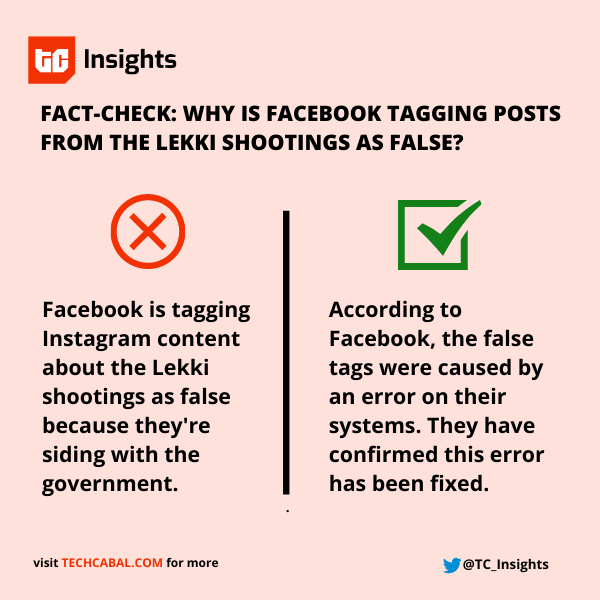

On Wednesday afternoon, Instagram users in Nigeria noticed something: when they uploaded the picture of the bloodied Nigerian flag, a fact-check flagged it as “False” news.

Less than a minute after posting the image, the following popped up:

“The same false information was reviewed in another post by fact-checkers. There may be small differences. Independent fact-checkers say this information has no basis in fact.”

But this fact-check was wrong. The article Instagram provided to support it was written on October 19. The bloodied flag is from the tragic Army shootings that occurred on October 20 during the #EndSARS protests in Lagos.

The fact-check article focused on analysing a CNN news chyron about “two deadly viruses killing Nigerians.” Apparently, some people (in the US) inferred from the chyron that there was an outbreak of SARS, the respiratory disease caused by a coronavirus, in Nigeria.

Instagram misapplied this fact-check to a Nigerian event. The check was done by Check Your Fact, one of the organisations Facebook (which owns Instagram) outsources fact-checking to. Check Your Fact is affiliated with The Daily Caller, an American online news publisher.

This error highlights the weaknesses in social media companies’ fight against misinformation and disinformation on their platforms. Facebook fixed the false flagging of the bloodied flag hours later and issued an apology.

“We are aware that Facebook’s automated systems were incorrectly flagging content in support of #EndSARS, and for this we are deeply sorry. This issue has since been resolved, and we apologize for letting down our community in such a time of need,” a Facebook spokesperson told TechCabal in an email.

Also on Wednesday, some people observed that Twitter had subdued the Retweet button a bit to constrain the speed with which tweets could go viral. CEO Jack Dorsey clarified that users could still retweet but Twitter now prompts users to read articles before retweeting links.

It isn’t surprising to see these platforms struggle with a consistent approach. In trying to constrain or exclude information, Facebook and Twitter are battling their core founding philosophies – connecting the world and giving everyone a voice.

After taking heat for enabling malicious actors – read Russians – in 2016, Facebook and Twitter want to avoid an encore ahead of the November 3 elections in the United States.

But this one-track focus isn’t favoring these platforms’ global users equally.

By having an American-first focus on their misinformation detection, Facebook and Twitter might fail to take the peculiar needs of African democracies into account.

How Facebook and Twitter’s algorithms spread news

Both platforms came online in the mid-2000s and have evolved a number of times, from simple messaging apps to full-blown news platforms with editorial features.

Around February 2016, Twitter decided to start showing users content that was relevant to them. Before that, tweets on your timeline appeared strictly in a reverse-chronological order – the most recent came first.

Today, Twitter reminds you of tweets that have gained lots of engagement (likes, retweets) from people you follow. There’s a primal motivation here: we are more likely to engage with a piece of information if we see that people we know have engaged with it.
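To make the difference concrete, here is a minimal Python sketch of the two orderings described above. The `Tweet` fields, the `following` set and the way engagement is counted are illustrative assumptions, not Twitter's actual ranking system.

```python
from dataclasses import dataclass, field


@dataclass
class Tweet:
    author: str
    text: str
    posted_at: int  # hypothetical timestamp: larger means more recent
    # Hypothetical field: accounts that liked or retweeted this tweet
    engaged_by: set = field(default_factory=set)


def reverse_chronological(tweets):
    """Older timeline style: the most recent tweet comes first."""
    return sorted(tweets, key=lambda t: t.posted_at, reverse=True)


def engagement_from_follows(tweets, following):
    """Illustrative 'relevance' ordering: tweets that more of the accounts
    you follow engaged with rise to the top; recency breaks ties."""
    def score(t):
        return (len(t.engaged_by & following), t.posted_at)
    return sorted(tweets, key=score, reverse=True)


tweets = [
    Tweet("a", "old but popular", 1, {"x", "y"}),
    Tweet("b", "newest", 3, set()),
    Tweet("c", "middle", 2, {"x"}),
]
following = {"x", "y"}

chronological = [t.text for t in reverse_chronological(tweets)]
ranked = [t.text for t in engagement_from_follows(tweets, following)]
```

In this toy example the oldest tweet moves to the top of the ranked list simply because two followed accounts engaged with it, which is the effect the paragraph above describes.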

On Facebook, the news feed is designed to be a “personalized, ever-changing collection of photos, videos, links, and updates from the friends, family, businesses, and news sources you’ve connected to on Facebook.”

Facebook judges a post’s relevance to a user based on three criteria: who posted it, content type and interactions. The news you see most often is most likely a result of what your most active family member or friend engages with.
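As a rough illustration of how three such signals could be folded into a single ranking, consider the Python sketch below. The field names, weights and formula are all made-up placeholders for this example; Facebook has not published its scoring in this form.

```python
def relevance(post, user):
    """Combine the three signals named in the text into one score.
    Every weight here is an assumption chosen for illustration."""
    # 1. Who posted it: how close the user is to the author (0.0 to 1.0)
    who = user["affinity"].get(post["author"], 0.0)
    # 2. Content type: how much the user tends to engage with this format
    kind = user["type_preference"].get(post["type"], 0.0)
    # 3. Interactions: comments and shares weighted above likes
    interactions = post["likes"] + 2 * post["comments"] + 3 * post["shares"]
    return who * (1.0 + kind) * (1 + interactions)


user = {
    "affinity": {"cousin": 0.9, "brand": 0.2},
    "type_preference": {"video": 0.5, "link": 0.1},
}
posts = [
    {"id": 1, "author": "brand", "type": "video",
     "likes": 10, "comments": 1, "shares": 0},
    {"id": 2, "author": "cousin", "type": "link",
     "likes": 10, "comments": 2, "shares": 1},
]

# Highest score first, as a feed would show it
feed = sorted(posts, key=lambda p: relevance(p, user), reverse=True)
feed_ids = [p["id"] for p in feed]
```

Here the cousin's link outranks the brand's video even though the user prefers video, because closeness to the author and interactions dominate in this toy formula.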

In summary, both platforms depend on AI-based algorithms to curate what users see. The algorithms are built on deep learning and natural language processing techniques that try to understand the meaning and context of words. (AI junkies, find more details on Facebook and Twitter.)

Fact-checking in Africa: Missing links

To curb misinformation, Facebook says it now prioritizes “original reporting and stories with transparent authorship” on the news feed. Users also have the ability to control their news preferences.

In March, Twitter started adding labels or warnings to tweets that appeared to contain misleading, disputed or unverified claims. The move was prompted by a proliferation of misinformation on COVID-19 but has been applied to checking statements about US elections.

But who do both platforms rely on to determine what is verified or false? Let’s start with Facebook.

Facebook’s fact-check program relies on third-party organisations. In Nigeria, those partners include Africa Check (a fact-checking organisation), AFP (a news organisation) and Dubawa (affiliated with Premium Times, a Nigerian online news organisation).

In Africa, Facebook’s fact-check program is present in 15 countries, with AFP anchoring in each case. AFP and other country-specific partners identify, review and rate potential misinformation for Facebook to take action.

As this week’s events show, the presence of this Facebook structure isn’t an assurance that mistakes won’t be made when flagging news in Africa.

Twitter says it relies on “trusted partners [to] identify content that is likely to result in offline harm.”

When Twitter places labels on tweets it considers misleading, the labels link to pages curated by Twitter “or external trusted source containing additional information on the claims made within the Tweet.”

Unlike Facebook, Twitter does not say who these partners are. Considering the underdeveloped traditional media ecosystem in Africa, one wonders who Twitter relies on to guide its misinformation detection project on the continent.

This concern has become important during the #EndSARS protests. In the aftermath of recent killings attributed to the Nigerian Army, an outbreak of misinformation has threatened to reframe the public’s recollection of what happened.

Twitter has yet to take action on the Army’s Twitter account which brands credible reports of its complicity as “fake news.”

Labels and warnings have yet to be fully activated in Nigeria, probably because it’s not election season here. However, Twitter would show that it takes a significant user base seriously by attending to the particular use cases that make the platform useful on the continent.

Preserving social media in Africa

Nigeria’s #EndSARS movement has been mostly coordinated on Twitter, as has Namibia’s #ShutItAllDown campaign on violence against women.

Africans have come to rely on social media platforms to raise awareness about poor governance and other civil challenges. Eric Schmidt, Google’s former CEO, may think social networks “amplify idiots and crazy people” but these platforms have become the information railroads of first choice in Africa.

Where the National Broadcasting Commission asks traditional media not to embarrass the government in their reporting, citizens take to social media to speak directly to the world.

Social media has become an essential service, which is why fears of a total or partial internet shutdown are valid.

But this necessary role is also why the platforms have to put more work into understanding what Africans are talking about and fact-checking appropriately. With its plans for a Nigeria office staffed with engineers and policy people, Facebook might be on the path to doing better.